AI agents aren’t dumb. They’re operating in the dark.

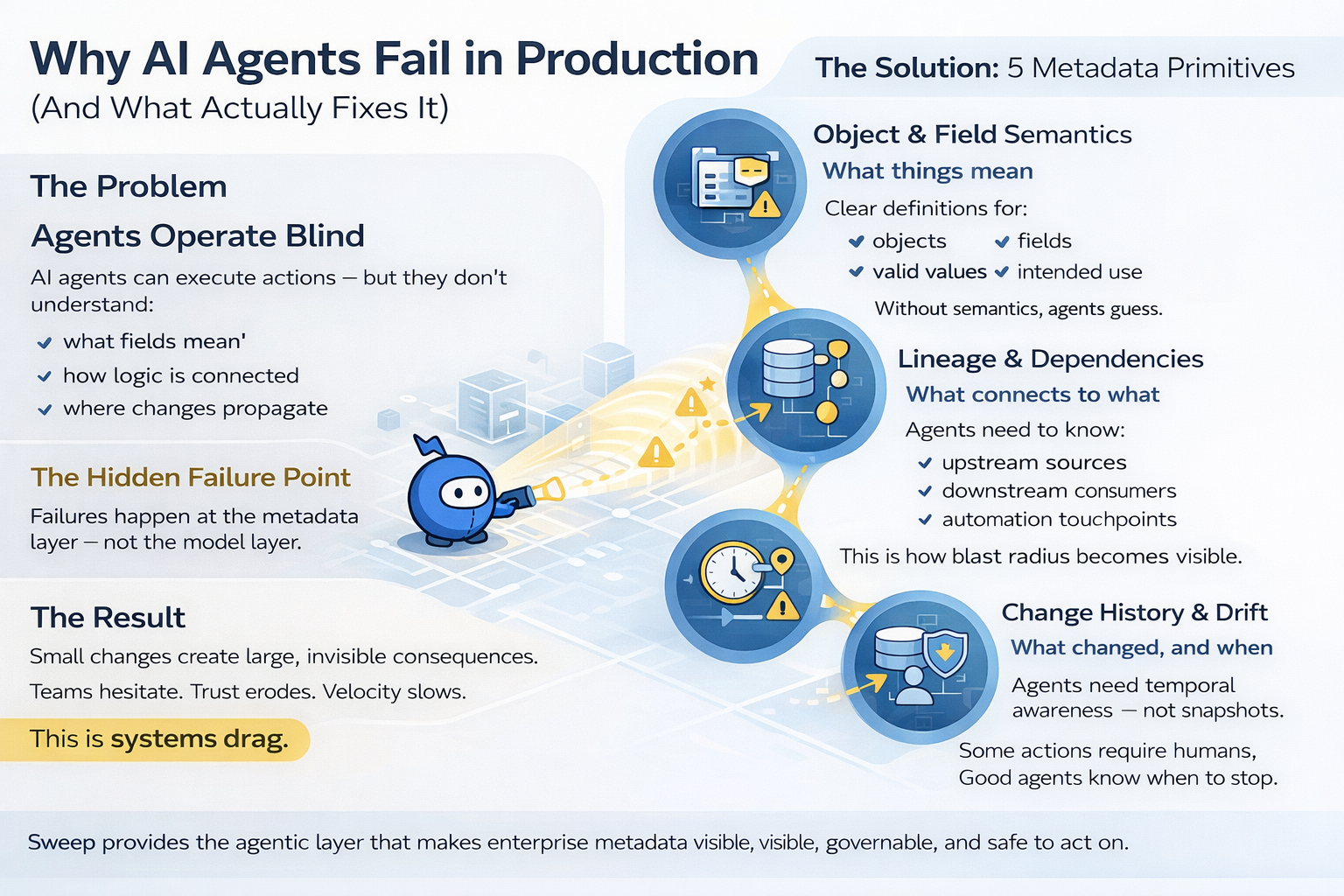

Want proof? Most enterprise AI initiatives don’t collapse at the model layer. They break much earlier, at the metadata layer — the hidden logic that defines what fields mean, how systems connect, and which actions are safe to take. When that layer is incomplete or stale, even the smartest agent is left to guess.

This piece walks through the five metadata primitives every AI agent needs to operate reliably inside real go-to-market systems. Not demos. Not sandboxes. Production. Let's turn on the light.

TL;DR

- AI agents don’t need better prompts — they need system context. Metadata is that context, but only when it’s complete, current, and connected.

- There are five foundational metadata primitives agents depend on, and missing even one introduces systems drag and unsafe automation.

- Sweep exists to make these primitives real and usable.

Why Most AI Agents Fail in Production

On paper, an agent can update a field, route a lead, or tweak a workflow like nobody's business. In reality, that same action might break downstream dashboards, violate compliance rules, trigger recursive automations, corrupt historical data, or any number of less-than-ideal outcomes.

The problem isn't what the agent can do. It's what the agent understands.

In platforms like Salesforce, meaning doesn’t live in rows of data. It lives in metadata — in definitions, dependencies, ownership, and constraints. When agents can't see that layer, they don't understand the system they’re operating in. They act locally but break things globally.

To address this, agents need better primitives.

Primitive #1: Object and Field Semantics

Before an agent can act, it needs to understand what things even mean. Not just labels, but intent. What a field represents, how it’s supposed to be used, and what valid versus dangerous values look like.

Without semantic metadata, fields become interchangeable strings. That’s how ARR gets overwritten by pipeline estimates, how lifecycle stages drift into nonsense, and how “temporary” fields become permanent, load-bearing dependencies.
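To make that concrete, here's a minimal sketch of what semantic metadata for one field could look like. The `FieldSemantics` record, the `check_write` helper, the `ARR__c` field name and the source labels are all hypothetical, chosen for illustration; they aren't a Salesforce or Sweep API. The point is that once meaning and valid sources are written down, an agent can refuse the "ARR overwritten by a pipeline estimate" write instead of guessing.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class FieldSemantics:
    """Hypothetical semantic descriptor for a single field."""
    api_name: str
    meaning: str                         # what the field represents, in plain language
    allowed_sources: frozenset[str]      # systems permitted to write it
    is_valid: Callable[[object], bool]   # what a sane value looks like

def check_write(sem: FieldSemantics, value: object, source: str) -> list[str]:
    """Return reasons to block a proposed write; an empty list means no objection."""
    problems: list[str] = []
    if source not in sem.allowed_sources:
        problems.append(f"{sem.api_name} should not be written from '{source}'")
    if not sem.is_valid(value):
        problems.append(f"{value!r} is not a valid value for {sem.api_name}")
    return problems

# Hypothetical field: ARR comes from billing, never from pipeline estimates.
ARR = FieldSemantics(
    api_name="ARR__c",
    meaning="Annual recurring revenue from signed contracts",
    allowed_sources=frozenset({"billing_sync"}),
    is_valid=lambda v: isinstance(v, (int, float)) and v >= 0,
)

print(check_write(ARR, 120_000, "billing_sync"))       # []
print(check_write(ARR, 450_000, "pipeline_estimate"))  # flags the wrong source
```

The value 450,000 is perfectly plausible on its own. It's the meaning (where ARR is allowed to come from) that makes the write dangerous.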

Primitive #2: Lineage and Dependencies

Every action has a blast radius. An agent needs to know where inputs come from, what depends on them downstream, and how objects and systems are coupled.

Without that lineage, an agent can reason about intent but cannot analyze impact. It can make changes that seem small and sensible, only to trigger outages somewhere else. This is where production incidents are born — not from big moves, but from blind ones.

Lineage is how agents learn the most important skill of all: caution.
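At its core, blast radius is a reachability question over a dependency graph. Here's a minimal sketch, assuming lineage has already been extracted into a map from each component to the things that consume it. The component names are hypothetical, and breadth-first search is just one reasonable way to walk the graph.

```python
from collections import deque

# Hypothetical lineage: each key feeds the components listed as its value.
DOWNSTREAM: dict[str, list[str]] = {
    "Lead.Lifecycle_Stage__c": ["Flow: Lead Routing", "Report: Funnel Conversion"],
    "Flow: Lead Routing": ["Opportunity.Source__c"],
    "Opportunity.Source__c": ["Dashboard: Pipeline by Source"],
    "Report: Funnel Conversion": ["Dashboard: Pipeline by Source"],
}

def blast_radius(start: str, graph: dict[str, list[str]]) -> set[str]:
    """Return every component transitively downstream of `start` (breadth-first)."""
    seen: set[str] = set()
    queue = deque(graph.get(start, []))
    while queue:
        node = queue.popleft()
        if node in seen:
            continue  # shared dependencies and cycles are visited only once
        seen.add(node)
        queue.extend(graph.get(node, []))
    return seen

print(sorted(blast_radius("Lead.Lifecycle_Stage__c", DOWNSTREAM)))
```

One picklist field turns out to reach four components, including a dashboard reached by two separate paths. That's the number an agent should weigh before calling a change "small."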

Primitive #3: Change History and Drift Signals

Agents also need a sense of time. They need to know what changed, when it changed, and how often things change.

Static metadata is a lie in large, fast-moving systems. Definitions drift. Fields get repurposed. Logic accretes. Without historical context, agents can’t tell whether a system is stable or volatile.

Drift is the signal agents use to decide whether to proceed, pause, or escalate. No history means no judgment.
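One simple way to turn change history into that decision is to count recent changes to a component and map the count to an action. This sketch assumes a list of change timestamps per component. The 30-day window and both thresholds are illustrative, not recommendations. It also treats *missing* history (as opposed to an empty one) as grounds to escalate, since no history means no judgment.

```python
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional

class Decision(Enum):
    PROCEED = "proceed"
    PAUSE = "pause"
    ESCALATE = "escalate"

def drift_decision(
    changes: Optional[list[datetime]],
    now: datetime,
    window: timedelta = timedelta(days=30),  # illustrative look-back window
    pause_at: int = 3,                       # illustrative threshold
    escalate_at: int = 6,                    # illustrative threshold
) -> Decision:
    """Turn a component's change history into proceed / pause / escalate."""
    if changes is None:
        # No history at all means no basis for judgment, so hand it to a human.
        return Decision.ESCALATE
    recent = sum(1 for ts in changes if now - ts <= window)
    if recent >= escalate_at:
        return Decision.ESCALATE
    if recent >= pause_at:
        return Decision.PAUSE
    return Decision.PROCEED

now = datetime(2024, 6, 1)
stable = [now - timedelta(days=200)]                                    # one old change
volatile = [now - timedelta(days=d) for d in (1, 3, 5, 8, 12, 20, 25)]  # 7 in 30 days

print(drift_decision(stable, now))    # Decision.PROCEED
print(drift_decision(volatile, now))  # Decision.ESCALATE
print(drift_decision(None, now))      # Decision.ESCALATE
```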

Primitive #4: Ownership and Governance Context

Safe autonomy depends on knowing when not to act.

Agents should understand who owns a piece of logic, who approves changes, and which areas are sensitive or regulated. Think of it as guardrails for intelligence.

Ownership metadata is what tells an agent, “This is risky. Get a human.” Without it, autonomy turns reckless.
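In practice, "get a human" can start as a lookup. Find who owns the component the agent wants to change and whether it's flagged as sensitive, then route the change to the right approver instead of acting. Here's a minimal sketch. The registry, team names and the `required_approver` helper are hypothetical. Note that unknown ownership defaults to escalation, not to permission.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Ownership:
    owner: str        # team accountable for the logic
    approver: str     # who signs off on changes
    sensitive: bool   # regulated, revenue-critical, or otherwise high-risk

# Hypothetical registry; a real one would be derived from system metadata.
REGISTRY: dict[str, Ownership] = {
    "Flow: Lead Routing": Ownership("RevOps", "revops-lead", sensitive=False),
    "Field: Opportunity.Discount__c": Ownership("Finance", "finance-controller", sensitive=True),
}

def required_approver(component: str) -> Optional[str]:
    """Return who must approve a change, or None if the agent may act on its own."""
    entry = REGISTRY.get(component)
    if entry is None:
        return "admin"  # unknown ownership is itself a risk: escalate by default
    return entry.approver if entry.sensitive else None

print(required_approver("Flow: Lead Routing"))              # None
print(required_approver("Field: Opportunity.Discount__c"))  # finance-controller
print(required_approver("Flow: Legacy Scoring"))            # admin
```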

Primitive #5: Execution Constraints and Guardrails

Finally, agents need boundaries. They need to know which actions are allowed, which require review, and which are off-limits entirely.

Constraints are what turn agents from chaos engines into operators. Without them, every agent is one clever prompt away from production damage.
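Those three tiers map naturally onto a policy table with a default-deny rule: anything not explicitly listed is off-limits. Here's a minimal sketch. The action names are hypothetical placeholders, not any platform's real permission model.

```python
from enum import Enum

class Tier(Enum):
    ALLOWED = "allowed"
    REVIEW = "requires_review"
    FORBIDDEN = "forbidden"

# Hypothetical policy: which agent actions fall into which tier.
POLICY: dict[str, Tier] = {
    "update_record_field": Tier.ALLOWED,
    "reassign_lead_owner": Tier.ALLOWED,
    "edit_flow_logic": Tier.REVIEW,
    "change_field_type": Tier.REVIEW,
    "delete_field": Tier.FORBIDDEN,
}

def classify(action: str) -> Tier:
    """Look up an action's tier; unknown actions are FORBIDDEN (default-deny)."""
    return POLICY.get(action, Tier.FORBIDDEN)

print(classify("edit_flow_logic"))   # Tier.REVIEW
print(classify("rewrite_all_flows")) # Tier.FORBIDDEN
```

Default-deny is the design choice that matters most here. A new or misspelled action can't slip through just because nobody wrote a rule for it.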

How These Primitives Work Together

These primitives reinforce each other. Semantics without lineage creates confidence without caution. Lineage without history creates awareness without memory. Guardrails without ownership create control without accountability.

Together, though, they form something agents actually need: clarity.

What Happens Without Them: Systems Drag

When these primitives are missing, everything slows down. Teams hesitate. Admins become gatekeepers. AI hallucinations get mislabeled as “automation bugs.” Trust erodes, both in the technology and in the system itself.

This is systems drag — the compound interest of metadata debt. And once it sets in, every new initiative costs more than the last.

How Sweep Becomes the Agentic Metadata Layer

Sweep exists to make these primitives real, living, and usable. Not as static documentation. Not as dashboards frozen in time. But as an agentic layer that continuously maps, explains, monitors, and governs metadata across your systems.

Agents need context.

Sweep provides it. Constantly.

Want to see how we do it? You can read more about us here.