Enterprise Salesforce orgs rarely fail because they are misconfigured.

They more often fail because they have accumulated entropy: a growing gap between what the system appears to do and what it actually does at runtime.

As organizations introduce AI agents into Salesforce (agents that explain, recommend, and increasingly act), this gap becomes a material risk. When systems are opaque, fragmented, or have drifted over time, even correct AI behavior can create unintended outcomes.

To understand where this risk concentrates, we analyzed thousands of anonymized AI agent interactions across real enterprise Salesforce environments.

Each interaction was scored using the Salesforce Entropy Index, a 1–5 diagnostic scale that measures how difficult it is — for both humans and AI agents — to confidently determine system behavior.

The goal of this research is to identify where certainty breaks down in Salesforce implementations, and what that means for autonomous systems.

Key finding: Entropy is not evenly distributed

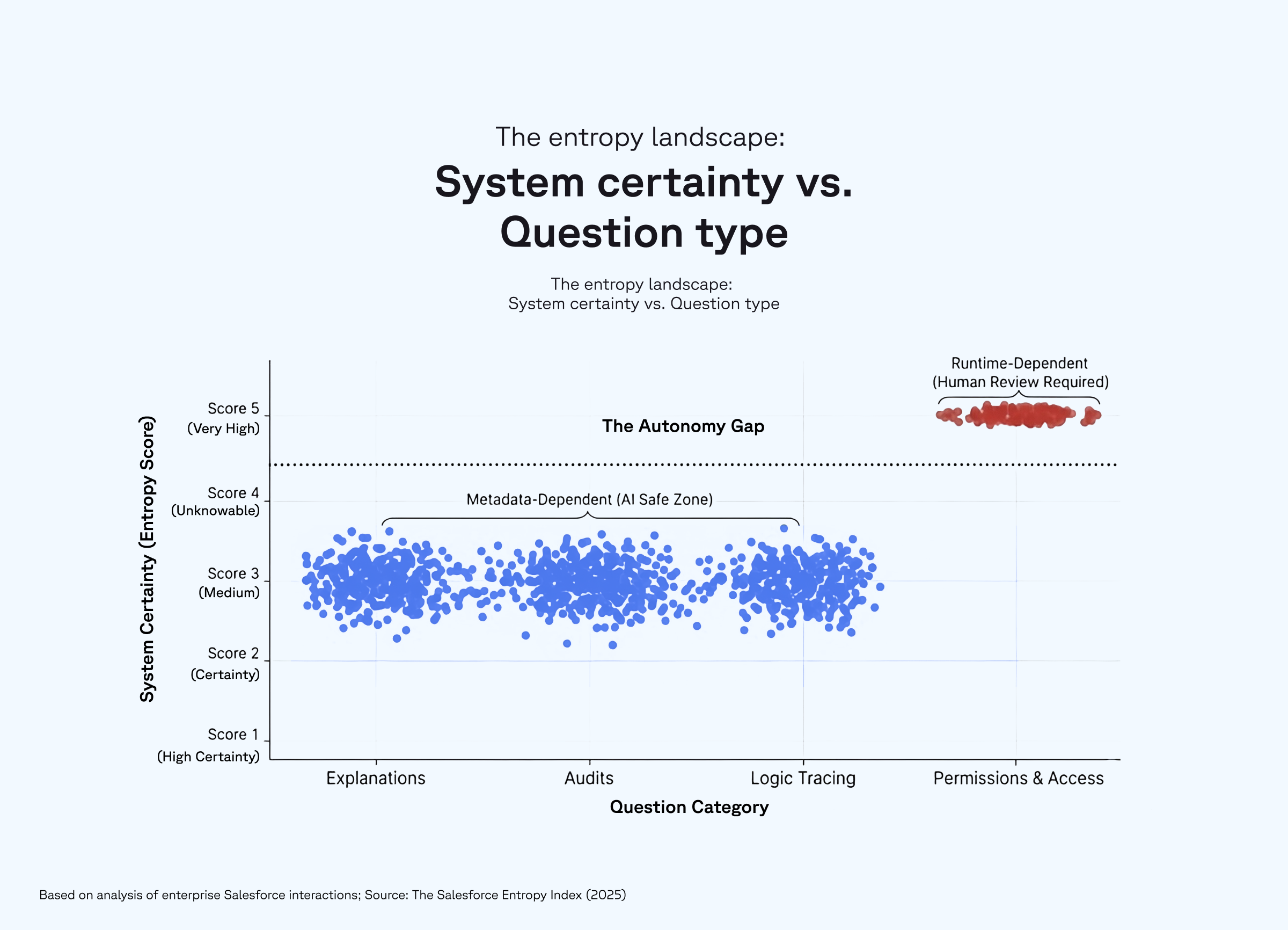

Across all the interactions we analyzed, entropy followed a clear and repeatable pattern.

Most Salesforce questions — explanations of Flows, audits of automation, dependency tracing, reporting logic, and design guidance — clustered in the Medium Entropy range (Score 3).

These questions often require synthesis across multiple metadata surfaces, but they remain legible. Both humans and AI agents can reason about them with high confidence.

By contrast, questions involving access, permissions, or authority boundaries consistently exhibited High or Very High entropy (Scores 4–5). These interactions were marked by low confidence, partial answers, or outright failure—not because the systems were complex, but because truth could not be determined from metadata alone.

This represents a categorical difference, not a gradual increase in difficulty.

Permissions-related questions are different in kind. They rely on runtime computation, overlapping authority models, and fragmented sources of truth that neither humans nor agents can conclusively reconcile without direct testing or institutional knowledge.

Where systemic entropy lives

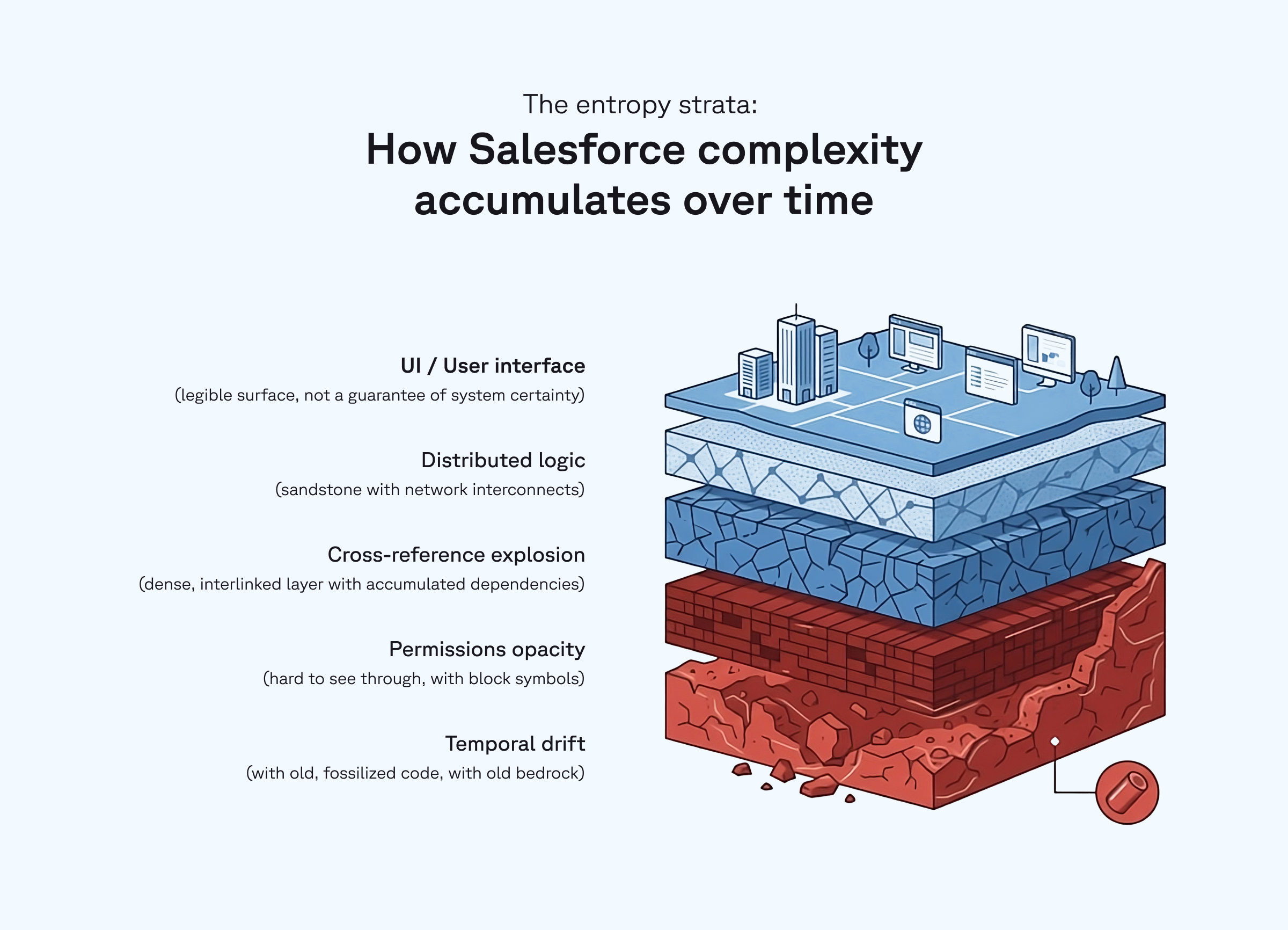

Seven recurring system behaviors — what we call Entropy Drivers — explain nearly all high-entropy outcomes observed in this research:

- Permissions Opacity: Access rights computed at runtime with no single authoritative view

- Fragmented Authority: Behavior governed by multiple mechanisms without clear precedence

- Distributed Logic: Business rules spread across flows, code, and validations

- Cross-Reference Explosion: Answers requiring traversal of many objects and dependencies

- Code Opacity: Imperative logic that obscures intent and downstream effects

- Namespace Ambiguity: Unclear boundaries between managed and custom components

- Temporal Drift: Logic whose purpose has been lost to history

As the chart above indicates, high-entropy interactions are not random: they occur where these drivers compound, most notably at permission boundaries and usage attribution surfaces.

Implications for AI and autonomy

A common assumption in enterprise AI adoption is that risk scales with system complexity. This research suggests a more precise framing:

AI risk scales with epistemic uncertainty, not technical sophistication.

Agents do not fail everywhere.

They fail predictably, at boundaries where authority is fragmented and truth is computed dynamically.

One implication of this research is that tools that make high-entropy domains (particularly permissions) legible in real time can significantly reduce operational risk without restricting autonomy elsewhere.

This insight enables a pragmatic governance strategy. Rather than attempting to make agents universally autonomous, organizations can reduce risk immediately by classifying interactions by entropy before execution. In practice, routing high-entropy queries (especially permissions and access questions) to mandatory human review would remove a disproportionate share of risk from autonomous workflows without slowing day-to-day operations.
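A minimal sketch of such a pre-execution gate follows. It assumes each incoming interaction has already been assigned an Entropy Score and a topic label; the `Interaction` type, the `route` function, the topic labels, and the review threshold of 4 are illustrative assumptions, not part of any Salesforce or Sweep API.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTONOMOUS = "autonomous"
    HUMAN_REVIEW = "human_review"


# Hypothetical topic labels for the access and authority questions the report flags.
ACCESS_TOPICS = {"permissions", "access", "authority"}

# Assumed threshold: Scores 4-5 (High and Very High) always go to a human.
REVIEW_THRESHOLD = 4


@dataclass(frozen=True)
class Interaction:
    question: str
    entropy_score: int  # 1-5 on the Salesforce Entropy Index
    topic: str          # hypothetical output of an upstream classifier


def route(interaction: Interaction) -> Route:
    """Decide, before execution, whether an agent may act on its own."""
    if not 1 <= interaction.entropy_score <= 5:
        raise ValueError("entropy_score must be between 1 and 5")
    if interaction.entropy_score >= REVIEW_THRESHOLD:
        return Route.HUMAN_REVIEW
    # Access questions are reviewed even when scored lower, as a safety margin.
    if interaction.topic in ACCESS_TOPICS:
        return Route.HUMAN_REVIEW
    return Route.AUTONOMOUS
```

Keeping the gate a pure function of score and topic makes it easy to audit. The hard part in practice is assigning the score reliably, which this sketch does not attempt.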

Why this matters now

As Salesforce evolves from a system of record into a system of action, entropy becomes more than an operational nuisance. It becomes a governance, safety, and trust concern.

The Salesforce Entropy Index provides a framework for identifying where certainty collapses before agents act, changes are deployed, or decisions are automated.

This inaugural report establishes the methodology and validates the signal. Future editions will expand the dataset, refine benchmarks, and track how entropy shifts as organizations modernize.

Entropy cannot be eliminated. But it can be measured, managed, and designed around.

Appendix A: Methodology

How the Salesforce Entropy Index Is Measured

The Salesforce Entropy Index is designed to measure system comprehensibility — how difficult it is for humans and AI agents to confidently determine what a Salesforce system will do, given a specific question or change.

It does not measure:

- Code quality

- Admin skill

- Architectural “correctness”

- Organizational maturity

Entropy, as defined here, is a property of the system itself.

Scope and data source

This report, conducted by Sweep, analyzes anonymized interactions with Sweep's metadata agents, drawn from real enterprise Salesforce environments. Each interaction represents a discrete, well-scoped question posed to an AI agent about Salesforce behavior, configuration, or impact.

Examples include:

- Explaining what a Flow does

- Identifying which automations update a field

- Auditing where logic is defined

- Determining whether a user can perform an action

Each interaction includes:

- The user’s question

- The agent’s response

- A confidence score reflecting answer quality and completeness

No customer names, org identifiers, or proprietary data were retained. The focus is on patterns of interaction, not individual environments. An AI agent was chosen as the analysis lens because it removes human compensation behaviors (e.g., intuition, folklore, and workarounds) and surfaces where system truth is explicitly knowable versus implicitly inferred.

What counts as an interaction

For the purposes of this index:

- One interaction = one scored data point

- Follow-up clarifications are not counted as separate interactions

- Near-duplicate questions are deduplicated when intent is materially identical

This ensures the index measures question difficulty, not conversational persistence.
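The counting rules above can be sketched as a small filter. This is a hypothetical illustration: the record fields mirror the three components listed earlier (question, response, confidence), while the `is_follow_up` flag and the `intent_key` normalization (lowercasing and keeping only word tokens) are assumptions standing in for whatever intent matching the study actually used.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class RawInteraction:
    question: str
    response: str
    confidence: float
    is_follow_up: bool = False  # hypothetical flag taken from the conversation log


def intent_key(question: str) -> str:
    """Crude stand-in for 'materially identical intent': normalize the wording."""
    return " ".join(re.findall(r"[a-z0-9_]+", question.lower()))


def scored_data_points(log: list[RawInteraction]) -> list[RawInteraction]:
    """Skip follow-up clarifications, then keep one interaction per intent."""
    seen: set[str] = set()
    points: list[RawInteraction] = []
    for item in log:
        if item.is_follow_up:
            continue  # clarifications are not separate interactions
        key = intent_key(item.question)
        if key in seen:
            continue  # near-duplicate question already counted
        seen.add(key)
        points.append(item)
    return points
```

A production version would need semantic matching rather than string normalization; the structure (filter, then deduplicate) is the part the rules above pin down.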

The entropy scoring model

Each interaction is assigned an Entropy Score from 1 to 5, based on observable outcomes and underlying system properties.

| Score | Entropy level | Description |
|---|---|---|
| 1 | Very Low | Single metadata source, deterministic behavior, high confidence |
| 2 | Low | Limited cross-references, predictable outcomes |
| 3 | Medium | Requires synthesis across multiple metadata surfaces |
| 4 | High | Fragmented authority, incomplete visibility, partial failure |
| 5 | Very High | Runtime computation required, no single source of truth |

Importantly, Score 5 is not simply “more complex” than Score 4. It represents a qualitative boundary where behavior cannot be conclusively determined through metadata alone.
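One way to make the rubric concrete is to derive a score from a few observable properties of an interaction. The sketch below is an assumption-laden illustration, not the index's actual scoring procedure: the `Observation` fields and the ordering of checks are hypothetical. It does encode the qualitative boundary just described, though: any dependence on runtime computation yields Score 5 regardless of every other property.

```python
from dataclasses import dataclass
from enum import IntEnum


class Entropy(IntEnum):
    VERY_LOW = 1
    LOW = 2
    MEDIUM = 3
    HIGH = 4
    VERY_HIGH = 5


@dataclass(frozen=True)
class Observation:
    metadata_surfaces: int      # distinct metadata sources consulted (hypothetical)
    cross_references: int       # references traversed to reach an answer
    fragmented_authority: bool  # several mechanisms govern the behavior
    partial_answer: bool        # the agent could only partially answer
    needs_runtime: bool         # the answer depends on runtime computation


def score(obs: Observation) -> Entropy:
    # Score 5 is a boundary, not a degree: runtime dependence overrides all else.
    if obs.needs_runtime:
        return Entropy.VERY_HIGH
    if obs.fragmented_authority or obs.partial_answer:
        return Entropy.HIGH
    if obs.metadata_surfaces > 1:
        return Entropy.MEDIUM
    if obs.cross_references > 0:
        return Entropy.LOW
    return Entropy.VERY_LOW
```

Checking the properties from most to least severe means a single runtime dependency cannot be "averaged away" by an otherwise simple question.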

Entropy drivers

Entropy scores are not assigned arbitrarily. They are explained by the presence and interaction of the seven recurring system behaviors introduced earlier: the Entropy Drivers.

These drivers are systemic as opposed to situational. High entropy emerges when multiple drivers compound, particularly at boundaries involving access, authority, and usage attribution.
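The drivers can be treated as tags on each scored interaction. The sketch below uses hypothetical names throughout: `compounds` flags interactions where two or more drivers co-occur at an access, authority, or usage-attribution boundary (the two-driver threshold is an assumption used to illustrate "compounding", not a figure from this report), and `driver_frequency` tallies which drivers appear among high-entropy interactions.

```python
from collections import Counter
from enum import Enum, auto


class Driver(Enum):
    PERMISSIONS_OPACITY = auto()
    FRAGMENTED_AUTHORITY = auto()
    DISTRIBUTED_LOGIC = auto()
    CROSS_REFERENCE_EXPLOSION = auto()
    CODE_OPACITY = auto()
    NAMESPACE_AMBIGUITY = auto()
    TEMPORAL_DRIFT = auto()


# Hypothetical boundary labels where compounding is most likely.
RISKY_BOUNDARIES = {"access", "authority", "usage_attribution"}


def compounds(drivers: set[Driver], boundary: str, min_drivers: int = 2) -> bool:
    """True when several drivers co-occur at an access/authority/usage boundary."""
    return boundary in RISKY_BOUNDARIES and len(drivers) >= min_drivers


def driver_frequency(tagged: list[tuple[int, set[Driver]]], min_score: int = 4) -> Counter:
    """Count how often each driver appears among interactions scored >= min_score."""
    counts: Counter = Counter()
    for entropy_score, drivers in tagged:
        if entropy_score >= min_score:
            counts.update(drivers)
    return counts
```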

What this index measures (and what it does not)

The Salesforce Entropy Index measures epistemic certainty:

- Can the system’s behavior be confidently known?

- Can dependencies be enumerated?

- Can authority be conclusively determined?

It does not measure:

- Whether behavior is “good” or “bad”

- Whether logic should exist

- Whether the system is functioning as designed

A high-entropy system may be operating exactly as intended, yet still be unsafe for autonomous action.

Interpreting the results

This report represents an initial benchmark, not a final statistical model. The goal is to identify structural patterns, not universal percentages.

Consistency of results across diverse interactions is treated as more meaningful than raw volume. Where patterns persist across expanded samples, they are treated as directionally validated.
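This kind of directional validation can be sketched as checking whether a structural pattern holds in every sample, rather than pooling counts. The example below encodes two patterns reported in the findings (Score 5 interactions involve access or authority; questions without an access component stay at or below Medium) and checks them across successive dataset expansions. The `(score, involves_access)` record shape and all function names are assumptions for illustration.

```python
from typing import Callable, Iterable

Record = tuple[int, bool]  # (entropy_score, involves_access), an assumed shape


def score5_requires_access(sample: Iterable[Record]) -> bool:
    """Pattern: every Score 5 interaction involves access or authority."""
    return all(involves_access for score, involves_access in sample if score == 5)


def non_access_capped_at_medium(sample: Iterable[Record]) -> bool:
    """Pattern: no interaction without an access component exceeds Score 3."""
    return all(score <= 3 for score, involves_access in sample if not involves_access)


def persists(pattern: Callable[[Iterable[Record]], bool],
             expansions: list[list[Record]]) -> bool:
    """Treat a pattern as directionally validated only if it holds in every expansion."""
    return bool(expansions) and all(pattern(sample) for sample in expansions)
```

Requiring the pattern in every expansion, rather than on average, is what makes a single counterexample visible.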

Future editions of the Salesforce Entropy Index will expand the dataset and refine benchmarks, but the core methodology will remain stable to preserve comparability over time.

Appendix B: The Findings

What the Salesforce Entropy Index Reveals

Based on analysis of hundreds of anonymized AI agent interactions across real enterprise Salesforce environments, several clear patterns emerge. These findings are consistent across multiple data expansions and remain stable as the dataset grows.

1. Entropy is not evenly distributed

Most Salesforce questions do not fall at the extremes.

The majority of interactions cluster in the Medium Entropy range (Score 3). These questions typically involve multiple metadata surfaces (Flows, fields, validations, reports, or Apex) but remain legible. Both humans and AI agents can reason about them with high confidence, even if the process is time-consuming.

Low-entropy interactions (Scores 1–2) exist but are relatively rare in enterprise orgs due to accumulated customization and scale.

High and Very High entropy interactions (Scores 4–5) are also rare, but disproportionately risky.

2. Permissions are a qualitatively different risk domain

Across all data analyzed, every interaction scoring Very High entropy (Score 5) involved access, permissions, or authority boundaries.

These include questions such as:

- Who can edit or delete a field

- Whether a user can perform an action

- Whether logic applies to a specific role or profile

- Whether usage or behavior can be attributed to a user group

These interactions fail not because of their complexity, but because truth is computed at runtime and authority is fragmented across profiles, permission sets, sharing rules, and execution context.

No explanation, audit, dependency, optimization, or design question exceeded Medium entropy unless access or authority was involved.

This indicates a categorical distinction, not a gradual slope of complexity.
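A small model shows why even the metadata-only part of an access question needs reconciliation. In Salesforce, a user's field-level permissions come from their profile plus every assigned permission set, and a grant in any one of them is enough. The sketch below computes that union from in-memory records whose field names loosely echo Salesforce's `FieldPermissions` object (`PermissionsRead`, `PermissionsEdit`); the `FieldGrant` type and `effective_field_access` function are hypothetical. It deliberately ignores permission set groups, muting permission sets, and all record-level sharing, which is the part computed at runtime and unresolvable from these records.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldGrant:
    """Loosely mirrors a FieldPermissions row: one grant from one source."""
    source: str             # profile or permission set name (illustrative)
    field: str              # e.g. "Opportunity.Amount"
    permissions_read: bool
    permissions_edit: bool


def effective_field_access(grants: list[FieldGrant], assigned: set[str],
                           field: str) -> dict[str, object]:
    """Union of read/edit grants across every assigned profile or permission set.

    Ignores permission set groups, muting permission sets, and sharing, so the
    result is an upper bound on field access, not an answer about any record.
    """
    relevant = [g for g in grants if g.source in assigned and g.field == field]
    can_edit = any(g.permissions_edit for g in relevant)
    can_read = can_edit or any(g.permissions_read for g in relevant)
    return {
        "read": can_read,
        "edit": can_edit,
        "granted_by": sorted(g.source for g in relevant
                             if g.permissions_read or g.permissions_edit),
    }
```

Even this simplified answer has to be assembled from several sources. Whether the user can edit a particular record still depends on sharing and execution context; Salesforce exposes that runtime result through the `UserRecordAccess` object, queried per user and record, and nothing in the sketch can substitute for it.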

3. Complexity is not the same as entropy

Many interactions involved complex systems:

- Deeply nested Flows

- Large CPQ implementations

- Multiple Apex triggers

- Interconnected reporting logic

In isolation, these factors did not produce high entropy.

Entropy rises when:

- Logic is split across multiple governing mechanisms

- Authority is ambiguous

- Dependencies cannot be conclusively enumerated

- Intent has been lost to historical drift

In other words, entropy reflects uncertainty, not sophistication.

4. Usage and attribution questions introduce hidden entropy

Questions about usage, such as whether a field, dashboard, or integration is actively used, appear simple but frequently exhibit high entropy.

These questions often depend on:

- Access to execution context

- Instrumentation that does not exist in metadata

- Historical or behavioral data outside configuration

As a result, usage and cleanup decisions often carry more uncertainty than design or explanation work, despite appearing lower risk.
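The practical consequence is that a usage question has three honest answers, not two. The sketch below, with hypothetical inputs and names, returns `UNKNOWN` whenever runtime activity is not instrumented, instead of treating an absence of metadata references as proof of non-use. The evidence fields are assumptions; in a real org such evidence would come from logging or usage data that lives outside configuration.

```python
from dataclasses import dataclass
from enum import Enum


class Usage(Enum):
    USED = "used"
    UNUSED = "unused"
    UNKNOWN = "unknown"


@dataclass(frozen=True)
class UsageEvidence:
    metadata_references: int  # flows, reports, or code that reference the component
    instrumented: bool        # is runtime activity captured at all?
    runtime_events: int = 0   # activity observed in the window, if instrumented


def usage_verdict(ev: UsageEvidence) -> Usage:
    if ev.instrumented and ev.runtime_events > 0:
        return Usage.USED
    if not ev.instrumented:
        # Metadata alone cannot show that nobody uses the component.
        return Usage.UNKNOWN
    # Instrumented but quiet: only call it unused if nothing references it,
    # since a referencing automation may simply not have run in the window.
    return Usage.UNUSED if ev.metadata_references == 0 else Usage.UNKNOWN
```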

5. High entropy predicts where autonomy breaks

AI agents do not fail randomly.

High-entropy interactions are predictable and cluster around:

- Permissions

- Authority boundaries

- Usage attribution

- Runtime-dependent behavior

This enables a practical governance insight:

Routing high-entropy queries (especially permissions-related ones) to mandatory human review would immediately remove a disproportionate share of risk from autonomous workflows.

Rather than limiting AI broadly, organizations can constrain autonomy surgically, where measured uncertainty is highest.

6. Entropy is a system property, not a team failure

High entropy does not imply:

- Poor admin practices

- Bad architecture

- Negligence or misconfiguration

It reflects how enterprise systems evolve over time — through growth, customization, turnover, and integration.

Entropy accumulates silently. AI agents simply make it visible.

What this means

The Salesforce Entropy Index shows that AI readiness is not a question of ambition or tooling. It is a question of where certainty collapses. Organizations that measure and manage entropy can:

- Enable AI safely where systems are legible

- Apply human judgment where systems are opaque

- Reduce risk without slowing progress