Everyone's talking about AI at work. So we asked 1,000 people at companies with 50 or more employees what it's actually like!

As it turns out, the gap between the hype and the reality is vast.

While companies pour billions into AI, most workers are somewhere between skeptical and queasy. Two-thirds don't trust AI with decisions that affect them. Nearly a third have put sensitive company data into public AI tools like ChatGPT. And more than half work at companies that abandoned at least one AI project in the past year.

Here's what we found:

The Numbers That Matter:

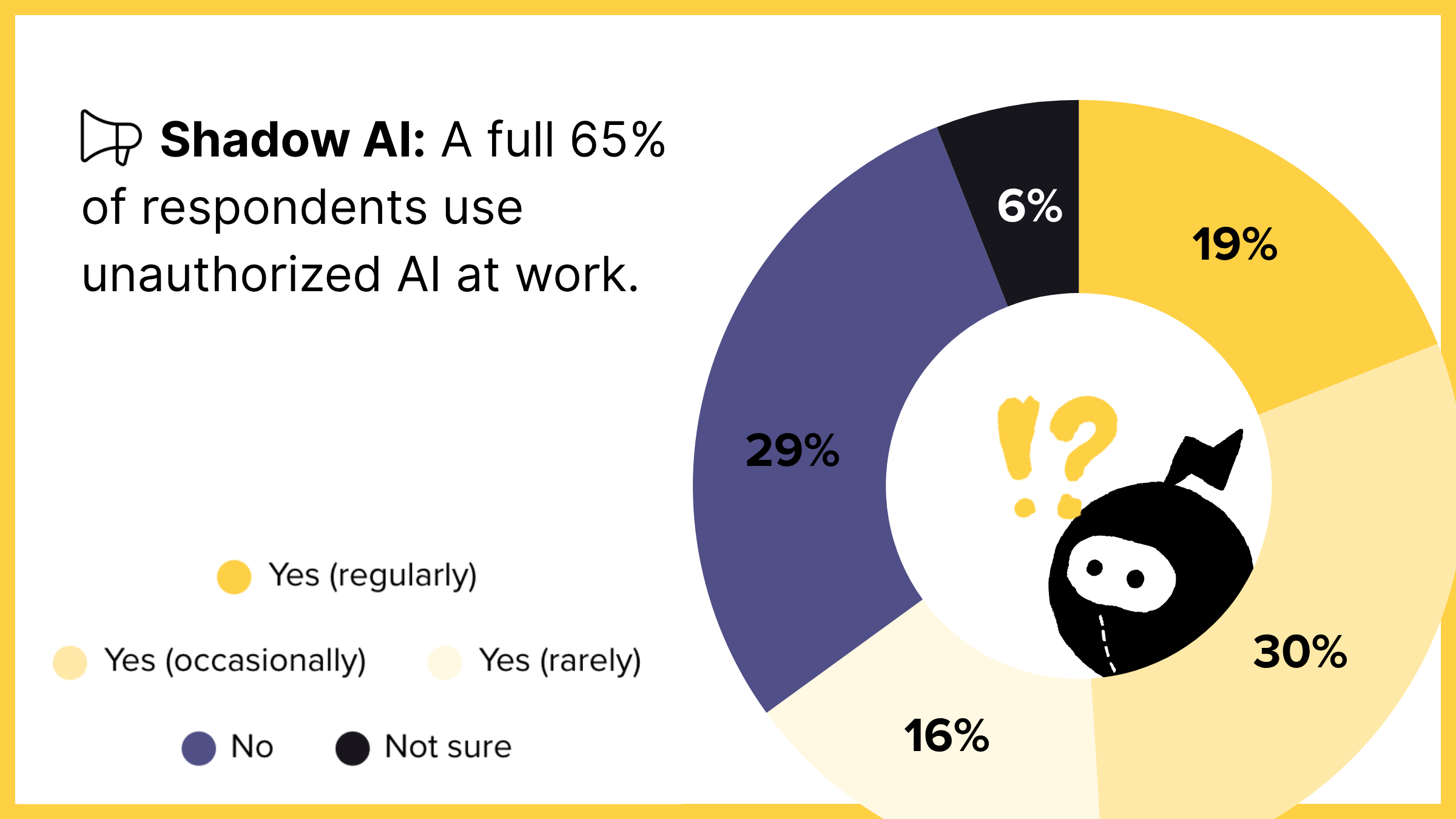

Shadow AI is everywhere. Period.

- 65% use unauthorized AI tools at work

- 30% have fed sensitive company data into public AI tools like ChatGPT

- Retail workers are the worst offenders: 72% using shadow AI

Nobody trusts this yet.

- Only 31% would trust AI with decisions that affect them

- 23% regularly encounter AI decisions nobody can explain

- Two-thirds are either skeptical or flat-out won't trust it

Most AI projects fail.

- 56% work at companies that abandoned at least one AI project in the past year

- Executives estimate that, on average, 37% of their AI budget isn't delivering value

- Only 10% of companies have high success rates

The foundation is quicksand.

- 22% don't know what data their AI actually uses

- 44% say less than half their projects succeed

- Asked what they'd do differently, 23% said they'd restart "with better data quality," by far the top answer

Nobody's actually in charge.

- 23% say no one owns AI (or they don't know who does)

- 57% have vague or nonexistent AI policies

- The #1 reason for failure? "Too expensive with unclear ROI"

Here's what this means:

The good news: AI works!

The bad news: Most companies are building it on foundations they don't understand, with no clear ownership, creating security risks and lighting money on fire.

Methodology

Survey Details:

- Sample Size: 1,000 respondents

- Survey Period: October 2025

- Screening Criteria: Full-time employees at companies with 50+ employees actively using, piloting, or planning AI initiatives

- Survey Length: 30 questions, 7-10 minutes

- Distribution: Representative mix across company sizes, industries, and roles

Demographic Breakdown:

By Role:

- Individual Contributors/Staff: 64%

- Manager/Team Lead: 20%

- VP/Director level: 12%

- C-Suite/Executive Leadership: 4%

By Company Size:

- 50-250 employees: 27%

- 251-1,000 employees: 26%

- 1,001-5,000 employees: 26%

- 5,001+ employees: 21%

By Industry:

- Technology/SaaS: 25%

- Financial Services: 16%

- Professional Services: 14%

- Healthcare: 13%

- Retail/E-commerce: 11%

- Manufacturing: 10%

- Other: 11%

AI Adoption Stage:

- Actively using AI in production: 63%

- Piloting/testing AI: 27%

- Planning AI initiatives: 10%

PART 1: The Confidence Crisis

We asked workers to describe AI at their workplace in one word. The results tell you everything:

The Top 10:

- Skeptical (15%)

- Cautious (14%)

- Curious (13%)

- Uneasy (12%)

- Hopeful (11%)

- Overwhelmed (9%)

- Optimistic (8%)

- Guarded (6%)

- Energized (6%)

- Excited (6%)

Notice what's missing? Words like "confident," "transformative," or "revolutionary."

The top four — skeptical, cautious, curious, uneasy — accounted for 54% of responses. People aren't leaping out of their seats for AI. They're wary. They're still very much waiting to be convinced.

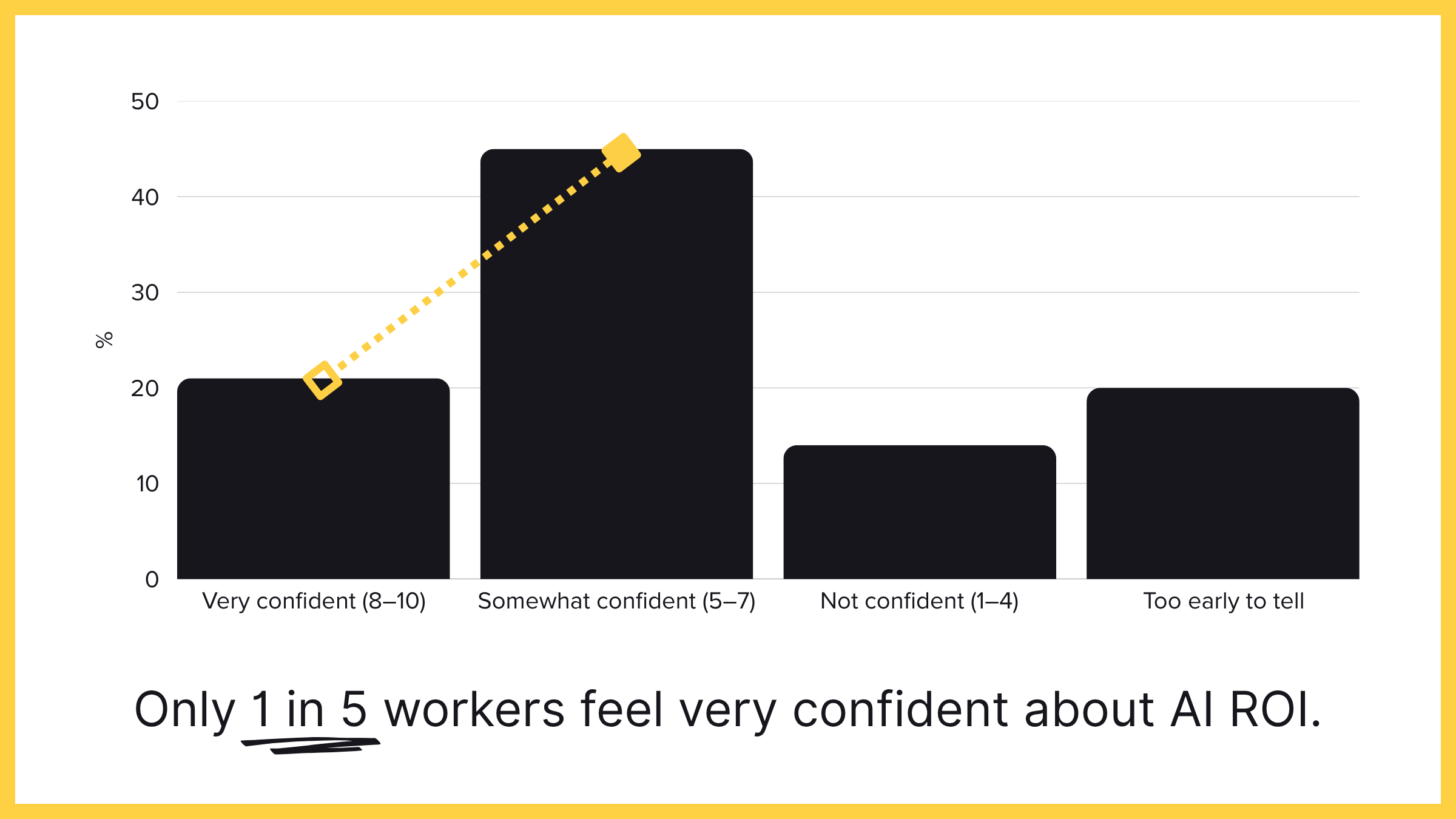

The ROI Question Nobody Wants to Answer

How confident are people that AI will actually deliver ROI?

- Very confident (8-10): 21%

- Somewhat confident (5-7): 45%

- Not confident (1-4): 14%

- Too early to tell: 20%

Only 1 in 5 workers is genuinely confident. Almost half are in the mushy middle: not convinced, but not ready to abandon ship. And 20% are still in the "let's wait and see" phase.

The AI pitch has been revolutionary transformation. The reality? Cautious optimism at best.

The Trust Problem

Here's the question: Would you trust AI to make decisions that directly affect you — your compensation, your performance review, your work assignments?

- Yes, definitely: 9%

- Probably: 22%

- Unsure: 34%

- Probably not: 24%

- Definitely not: 11%

68% either won't trust it or don't know whether they would. Only 9% would definitely trust it.

This is the problem underneath all the other problems. You can't scale AI adoption when two-thirds of your workforce doesn't trust it.

What Actually Worries People

Everyone's top concerns:

- Privacy and data security (25%)

- Cost without clear value (19%)

- AI making incorrect decisions (18%)

- Over-reliance on AI (9%)

- Can't understand how AI works (8%)

- AI bias or unfairness (7%)

- Job security/AI replacing workers (7%)

Notice what's not at the top? Job loss. Only 7% name being replaced as their top worry. Few people seem afraid of AI taking their jobs; they seem more afraid of AI not working, costing too much, and making bad decisions they can't understand or fix.

The split between executives and everyone else:

Executives worry most about security (29%), incorrect decisions (24%), and cost (24%).

Non-executives worry about the same things, in roughly the same order.

A shockingly rare moment of alignment: everyone is seeing the same problems.

PART 2: The Productivity Paradox

Has AI actually made work easier? Here's what people said:

- Much easier: 17%

- Somewhat easier: 47%

- No real change: 24%

- Somewhat harder: 11%

- Much harder: 1%

So 64% say AI made work easier. But only 17% say much easier.

Not quite the revolutionary productivity transformation we were promised. More like an incremental improvement. (And worth noting: for 36% of workers, AI made no real difference or made work harder.)

How Much Time AI Actually Saves

- Saves 5+ hours per week: 21%

- Saves 1-5 hours: 47%

- Saves less than 1 hour: 13%

- Breaks even: 14%

- Costs me time: 5%

The winners (21%) save real time: 5+ hours back every week, more than half a workday.

The largest group (47%) gets modest gains. An hour or two here and there. Nice, but not transformative.

The problem cases (32%) see little to no benefit. And the 5% who say AI actually costs them time? They're likely spending more time fixing AI mistakes than AI saves them.

That's a failure mode, not a feature.

Does AI Do What It Said It Would?

"AI at my company delivers on its promises..."

- Always or almost always: 10%

- Usually: 28%

- About half the time: 33%

- Rarely: 13%

- Never: 3%

- Too early to tell: 14%

Only 10% say AI consistently delivers. A third experience coin-flip reliability—it works "about half the time."

Inconsistent results kill confidence faster than outright failure. At least failure is predictable.

PART 3: The Failure Problem

What percentage of AI projects at your company are actually successful?

- 75-100%: 10%

- 50-74%: 32%

- 25-49%: 31%

- Less than 25%: 13%

- Don't know: 15%

Let's be clear: only 1 in 10 companies have high success rates.

Nearly half (44%) say less than half their projects succeed. And 15% don't know their success rate — which should tell you something about how seriously they're measuring this.

Fewer than half of respondents (42%) report a success rate of 50% or higher. (Arguably, the rest are running an expensive form of trial and error.)

How Many Projects Get Abandoned?

In the past 12 months, has your company killed any AI initiatives?

- Yes, 1-2 projects: 37%

- Yes, 3-5 projects: 14%

- Yes, more than 5 projects: 6%

- No: 31%

- Don't know: 13%

56% abandoned at least one project. More than half.

20% abandoned three or more. That's not bad luck. That's a pattern.

Only 31% made it through the year without abandoning anything.

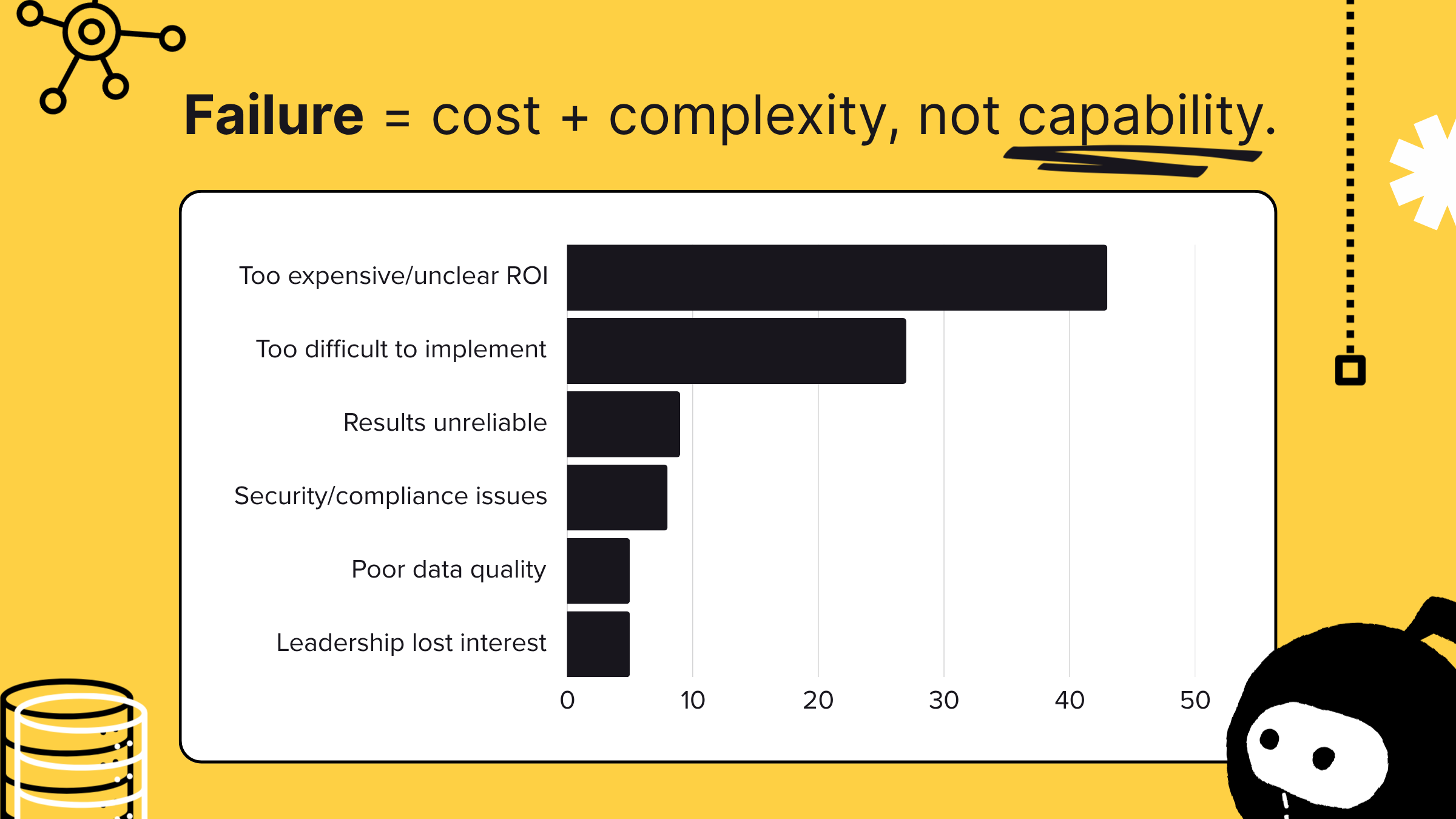

Why AI Programs Fail

For the projects that got killed, what was the main reason?

- Too expensive/unclear ROI: 43%

- Too difficult to implement: 27%

- Results weren't reliable: 9%

- Security/compliance concerns: 8%

- Poor data quality: 5%

- Leadership lost interest: 5%

Notice the top two: cost and complexity. Not capability—implementation.

The tech works. The business case doesn't, or the implementation is too hard.

"Poor data quality" ties for fifth at just 5%. But here's the thing: when we asked what people would do differently if they could restart, 23% said "start with better data quality," by far the #1 answer.

So either people didn't realize data was the problem until later, or they're reluctant to blame the foundation when the symptom is "too expensive" or "too hard."

The Restart Question

If you could restart your company's AI journey from scratch, what would you do differently?

- Start with better data quality: 23%

- Choose simpler use cases first: 15%

- Understand our existing systems first: 15%

- Set up better governance: 12%

- Better training for users: 10%

23% would fix the foundation first. That's nearly 1 in 4 people saying "we built on quicksand."

Second and third place—simpler use cases and understanding existing systems—are really the same thing. It's people saying "we ran before we could walk."

This is the lesson hiding in plain sight: You can't skip the boring infrastructure work and jump straight to the exciting AI stuff. The foundation determines everything else.

PART 4: The Black Box Problem

Can you actually explain what AI does? When AI makes a mistake, can you figure out WHY?

- Yes, usually: 16%

- Sometimes: 40%

- Rarely: 21%

- Never: 8%

- Don't know: 10%

- We haven't had AI mistakes: 5% (sure, okay)

Only 16% can usually trace mistakes back to causes.

40% can do so only sometimes, and another 29% rarely or never can. For most respondents, when AI fails, nobody can reliably say why.

You can't fix what you can't understand. You can't improve what you can't explain.

How Often This Happens

How often do you encounter AI decisions that nobody at your company can explain?

- Never: 7%

- Rarely: 25%

- Sometimes: 44%

- Often: 19%

- Very often: 4%

23% encounter unexplainable decisions "often" or "very often." That could mean weekly, maybe daily: AI making consequential decisions that nobody understands.

44% hit this "sometimes"—the occasional black box moment where everyone just shrugs.

Only 7% never see it. That's the tiny minority presumably working with genuinely explainable AI.

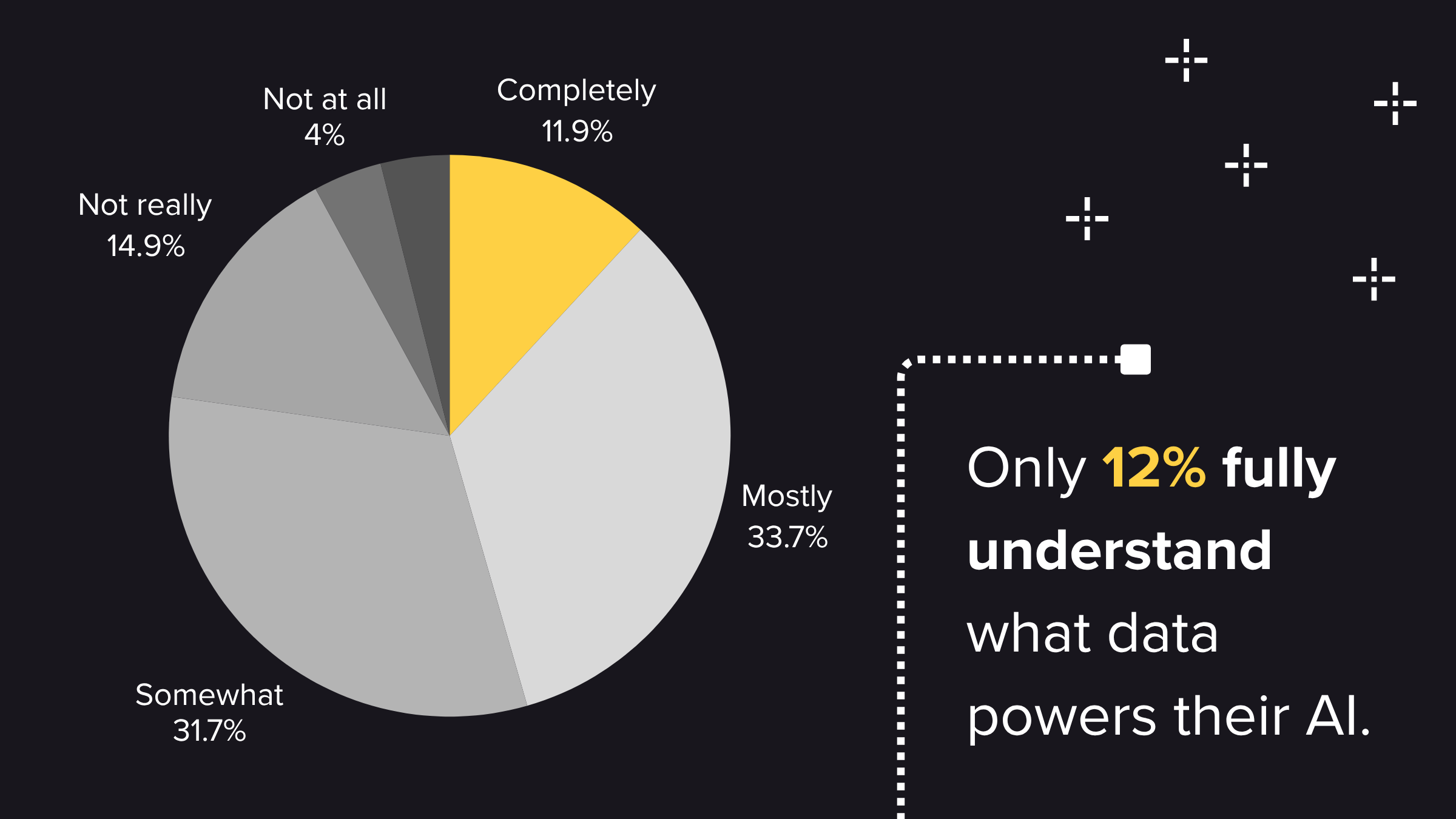

Do You Even Know What Data You're Using?

Does your company understand what data its AI systems are using?

- Yes, completely: 12%

- Yes, mostly: 34%

- Somewhat: 32%

- Not really: 15%

- Not at all: 4%

- Don't know: 4%

Only 12% have complete visibility. 22% have poor or nonexistent understanding.

This is the quiet crisis underneath all the loud failures. You're building AI on data you don't fully understand.

Which means:

- You can't explain decisions

- You can't trust outputs

- You can't ensure compliance

- You can't fix what breaks

The black box isn't the AI model. It's the data feeding it.

PART 5: The 'Shadow AI' Crisis

Hey. This is the part that should worry you.

How Many People Use Unauthorized AI?

Do you use AI tools at work that aren't officially approved?

- Yes, regularly: 19%

- Yes, occasionally: 30%

- Yes, but rarely: 16%

- No: 29%

- Not sure if they're approved: 6%

65% admit using unauthorized AI. Two-thirds of your workforce is operating outside official channels. Sounds… systemic.

Shadow AI by Industry

Industries with highest shadow AI usage:

- Retail/E-commerce: 72%

- Manufacturing: 67%

- Financial Services: 66%

- Professional Services: 65%

- Technology/SaaS: 64%

- Healthcare: 57% (lowest, possibly due to compliance requirements)

Worth noting: Even in highly regulated healthcare, more than half use unauthorized AI tools.

Shadow AI by Department

Departments with highest shadow AI usage:

- Operations: 72%

- IT/Technology: 68%

- Finance/HR: 64%

- Data/Analytics: 63%

- Product: 54%

Notably, IT/Technology ranks second: 68% of respondents in the very department responsible for controlling tool usage say they use unapproved tools themselves.

Why Workers Use Shadow AI

Top reasons for using unauthorized AI tools:

- They're faster: 41%

- They give better results: 33%

- Official tools don't meet my needs: 32%

- IT takes too long to approve tools: 28%

- Official tools are too complicated: 19%

- Didn't know they weren't approved: 13%

The pattern is clear: workers are trying to get work done, and speed and effectiveness win out over policy. This doesn't look malicious. It may instead reflect a stressed-out, overworked workforce.

The Sensitive Data Problem

Have you ever put sensitive company info into public AI tools (ChatGPT, Claude, Gemini)?

- No, never: 58%

- Yes, once or twice: 23%

- Not sure what counts as "sensitive": 12%

- Yes, multiple times: 7%

30% have *knowingly* put sensitive data into public AI tools. An additional 12% aren't sure what counts as sensitive — suggesting the actual number may be higher. (It’s probably way higher.)

What this means:

- Proprietary information has been exposed to public systems

- Potential data breaches and IP leakage

- Compliance violations in regulated industries

- Sensitive data potentially used to train AI models that competitors also use

Policy Clarity: The Governance Gap

Does your company have clear policies about which AI tools you can/cannot use?

- Yes, very clear: 19%

- Somewhat clear: 33%

- Not very clear: 23%

- No policies that I know of: 17%

- Don't know: 9%

Only 19% report "very clear" policies. 49% have unclear, absent, or unknown policies, creating an environment where shadow AI thrives.

PART 6: The Accountability Gap

Who's in charge here, anyway? Who is primarily responsible for AI at your company?

- IT Department: 26%

- Executive leadership: 19%

- Data/Analytics team: 17%

- Dedicated AI team or Chief AI Officer: 16%

- Nobody/unclear: 14%

- Don't know: 8%

23% say nobody is clearly responsible or don't know who is. Even among those who named an owner, answers are fragmented: IT, executive leadership, data teams, and dedicated AI teams are each cited by 16% to 26% of respondents, which suggests accountability is unclear.

Executive Budget Waste Estimates

Executives were asked: What percentage of your AI budget would you estimate isn't delivering value?

- 0-10% wasted: 17%

- 11-25% wasted: 21%

- 26-50% wasted: 36%

- 51-75% wasted: 17%

- 76-100% wasted: 10%

Average estimated waste: 37% of AI budget

62% of executives admit that more than a quarter of their AI spending delivers no value. At scale, that could amount to billions in wasted investment.
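The report doesn't say how the 37% average was calculated. One rough way to sanity-check it from the published ranges is a weighted average of range midpoints. This is a sketch under a stated assumption: each executive's answer is represented by the midpoint of the range they chose. The bucket list and the `midpoint_average` helper are illustrative, not part of the study.

```python
# Hypothetical cross-check: the study does not state how its 37% average was computed.
# Assumption: each response is represented by the midpoint of the range it falls in.
buckets = [
    # (low %, high %, share of executives in %)
    (0, 10, 17),
    (11, 25, 21),
    (26, 50, 36),
    (51, 75, 17),
    (76, 100, 10),
]


def midpoint_average(rows):
    """Weighted mean of range midpoints, normalized by the total share
    (the published shares sum to 101% because of rounding)."""
    total_share = sum(share for _, _, share in rows)
    weighted = sum((low + high) / 2 * share for low, high, share in rows)
    return weighted / total_share


estimate = midpoint_average(buckets)
print(f"Estimated average waste: {estimate:.1f}%")
```

Under that midpoint assumption the estimate comes out at about 37.4%, in line with the reported 37%. A different method, such as averaging exact numeric answers, could give a somewhat different figure.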

The Leadership Understanding Gap

Non-executives were asked: Does your leadership understand the day-to-day reality of working with AI tools?

- Yes, definitely: 16%

- Somewhat: 41%

- Not really: 28%

- Not at all: 9%

- Don't know: 6%

Only 16% say leadership "definitely" understands the reality. 37% say leadership doesn't really understand or doesn't understand at all. That's a significant disconnect between strategy and execution.

PART 7: What Would Actually Help

Building Confidence

What would make you MORE confident in AI at your workplace? (Top 2 selections)

- Seeing actual results/ROI: 39%

- Better transparency about how it works: 32%

- More training and support: 30%

- Human oversight on AI decisions: 25%

- Understanding our data better: 25%

- Clearer policies and rules: 24%

- Better tools that actually work: 22%

- Nothing - already confident: 2%

The top answer — "seeing actual results" — reflects more of that show-me-the-money skepticism. Workers want the proof, not the promises.

The second and third answers reveal the transparency and training gaps — people can't be confident in what they don't understand.

Notably, "understanding our data better" ties for fourth at 25%: a quarter of workers recognize the data foundation problem.

If They Could Start Over

If you could restart your company's AI journey, what would you do differently?

- Start with better data quality: 23%

- Choose simpler use cases first: 15%

- Understand our existing systems first: 15%

- Set up better governance: 12%

- Better training for users: 10%

- Get more employee buy-in: 10%

- Hire experts instead of building in-house: 10%

- Nothing - we're on the right track: 3%

The wisdom of experience: 23% would prioritize data quality — far ahead of any other choice. Combined with "understand our existing systems first" (15%), 38% would focus on the foundation before building.

PART 8: The Future Outlook

One Year From Now

One year from now, AI at your company will be:

- Much more valuable: 29%

- Somewhat more valuable: 45%

- About the same: 15%

- Less valuable: 2%

- Abandoned: 1%

- Don't know: 8%

Despite all this, faith remains. A full 74% expect AI to become more valuable. However, the plurality (45%) expect only incremental improvement, not transformation.

Only 29% expect it to become "much more valuable," a sign that experience has tempered expectations.

Our Recommendations

For Executive Leadership

1. Establish Clear AI Ownership

Appoint a single leader responsible for AI strategy, governance, and results. This can't be distributed across IT, data, and business teams.

2. Audit Your Data Foundation

Before investing more in AI, understand what data you have, where it lives, and what it means. "Better data quality" is the #1 restart choice, so learn from others' mistakes.

3. Address Shadow AI Immediately

With 65% using unauthorized tools and 30% exposing sensitive data, this is a critical security risk. Create fast-track approval processes for legitimate needs while enforcing consequences for risky behavior.

4. Set Realistic Expectations

Stop promising revolutionary transformation and start delivering incremental value. The trust deficit comes from overpromising and underdelivering.

5. Measure What Matters

"Seeing actual results/ROI" was the top confidence-builder (39%), so give people that. Establish clear metrics, track them religiously, and report transparently.

For IT & Data Leaders

1. Make Official Tools Competitive

Workers use shadow AI because it's faster (41%) and better (33%). Your official tools need to compete on speed and effectiveness, not just compliance.

2. Accelerate Approvals

28% cite "IT takes too long to approve tools." Create fast-track processes for low-risk AI tools while maintaining governance on high-risk applications.

3. Document Your Systems

15% would "understand our existing systems first" if restarting. Invest in documentation, data lineage, and system mapping before adding more AI.

4. Build Explainability

Only 16% can usually trace AI mistakes back to a cause. Invest in interpretability, logging, and audit trails so you can trace decisions and debug failures.

5. Create Clear Policies

57% have unclear or no AI usage policies. Publish simple, practical guidelines on what's allowed, what's not, and why.

For Business Unit Leaders

1. Start Small and Focused

15% would "choose simpler use cases first" if restarting. Don't try to boil the ocean—pick one clear problem and solve it well.

2. Invest in Training

30% want more training and support. Your team can't be confident in tools they don't understand. Budget for real training, not just webinars.

3. Demand Transparency

32% want better transparency about how AI works. Require vendors and internal teams to explain AI decision-making in plain language.

4. Keep Humans in the Loop

25% want human oversight on AI decisions. AI should augment, not replace, human judgment, especially for high-stakes decisions.

5. Measure and Iterate

Only 10% say AI consistently delivers. Treat AI like any other tool: measure results, learn from failures, and iterate.

Wrapping it all up

The Big AI at Work Study reveals a technology at an inflection point. AI works: 64% report it makes work easier, and 74% expect it to become more valuable. But the gap between promise and reality is substantial.

The Core Problems:

Foundation Issues: Organizations built AI on data they don't understand

Security Risks: Shadow AI creates massive exposure

Trust Deficit: Workers don't trust AI with consequential decisions

Accountability Gaps: Nobody clearly owns AI outcomes

Unrealistic Expectations: Overpromising leads to underdelivering

The Path Forward:

The 23% who would "start with better data quality" understand the core lesson: you can't build reliable AI on an unreliable foundation. Organizations need to:

- Pause and assess their data foundation

- Establish clear ownership and governance

- Address shadow AI security risks

- Set realistic expectations and measure results

- Build trust through transparency and results

The organizations that succeed won't be those with the most sophisticated AI — they'll be the ones who build AI on solid foundations, govern it effectively, and deploy it where it actually creates value.

The AI revolution is real. But it requires getting the fundamentals right first.

About this study

The Big AI at Work Study was conducted by Sweep in October 2025 to understand the real-world state of AI adoption across industries and company sizes. The study surveyed 1,000 full-time employees at companies with 50+ employees that are actively using, piloting, or planning AI initiatives.

About Sweep

Sweep helps organizations understand and optimize their technology infrastructure through AI-powered metadata management. Our platform provides visibility into complex systems like Salesforce, enabling companies to build AI on solid data foundations.

For more information or to discuss these findings, contact us at nickg@sweep.io

Note on Methodology: This report presents aggregated, anonymized data from survey responses. Individual responses were collected confidentially, and no identifying information is included in this report.